Technical Projects

Machine Learning & NLP Projects in Python

Electric Vehicle Charging Demand Estimator

Tools: Python, pandas, scikit-learn, Folium, Kepler.gl, matplotlib, seaborn, Streamlit

Skills Demonstrated: Geospatial data aggregation & visualization, supervised machine learning (classification & regression), data cleaning & preprocessing, feature engineering from temporal and spatial EV data, interactive app development with Streamlit, model evaluation and metric interpretation.

Summary:

The EV Charging Demand Estimator is a data-driven tool designed to help policymakers and energy providers identify underserved areas for EV infrastructure expansion. Using publicly available EV population data, the project estimates where public charging stations are most needed across regions by analyzing EV density, vehicle type, and location-based demand signals.

- Classification model to label high/medium/low demand regions.

- Regression model to predict the expected number of EVs per region.

Visual insights and model outputs were deployed through a user-friendly Streamlit app.

Key Insights:

- Counties with high EV population growth often lack proportional charging infrastructure.

- Urban regions showed non-linear demand trends due to saturation and availability of home chargers.

- Certain suburban and rural counties were flagged as high-opportunity zones for new charging stations.

| Model Type | Algorithm | Accuracy / R² | Key Metrics |

|---|---|---|---|

| Classification | Random Forest Classifier | 0.79 (Accuracy) | F1-score: 0.80 |

| Regression | Random Forest Regressor | 0.89 (R² Score) | RMSE: 0.54 |

Impact:

- Enables data-driven planning for EV infrastructure rollouts.

- Helps local governments and private investors prioritize regions with unmet charging needs.

- Encourages equitable access to EV charging across urban and rural areas.

- Supports sustainability goals by accelerating EV adoption through better infrastructure.

Customer Churn Prediction

Tools: Python, pandas, scikit-learn, imbalanced-learn, seaborn, matplotlib

Skills Demonstrated: EDA, feature engineering, class imbalance handling, model comparison, linear modeling, tree-based modeling, regularization, interpretability, business insight

Summary:

This project involved building an end-to-end machine learning pipeline to predict customer churn using the Telco dataset. The dataset contained information on 7,043 telecom customers in California, with features related to demographics, services used, and account activity. After exploratory analysis, the dataset was cleaned, transformed, and split into linear and tree-based model datasets. Class imbalance (~73% No Churn vs. ~27% Churn) was addressed using multiple resampling strategies, with SMOTE selected for its superior performance.

Key Insights:

- Customers with month-to-month contracts, fiber optic internet, and low tenure were significantly more likely to churn.

- Senior citizens and users who paid via Bank Withdrawal were also at higher churn risk.

- Lasso Regression identified the most impactful features: satisfaction score, online security, phone service, and internet type.

Model Performance Summary:

| Model | Accuracy | Precision (Churn) | Recall (Churn) | ROC AUC |

|---|---|---|---|---|

| Logistic Regression | 93% | 0.87 | 0.87 | 0.97 |

| Ridge Classifier | 95% | 0.92 | 0.87 | 0.97 |

| Lasso Regression | 93% | 0.88 | 0.86 | 0.96 |

| Decision Tree | 95% | 0.90 | 0.90 | 0.93 |

| Random Forest | 95% | 0.98 | 0.84 | 0.98 |

Impact:

The project highlights how machine learning models can help telecom providers predict and proactively manage customer churn. The final Random Forest model offered excellent precision and class separation, making it suitable for prioritizing high-risk customers and designing retention strategies.

Breast Cancer - Survival Analysis & Predictive Modeling

Tools: Python, pandas, lifelines, scikit-learn, xgboost, keras, seaborn, matplotlib

Skills Demonstrated: Survival analysis (Kaplan-Meier, Cox models), classification modeling, clustering, feature interpretation, medical data analysis

Summary:

This project applies survival analysis, machine learning, and unsupervised clustering to the Breast Cancer METABRIC dataset (2,000+ patients). The goal was to analyze patient survival patterns, predict high-risk individuals, and uncover hidden subgroups based on clinical and pathological features. The workflow included data preprocessing, EDA, Kaplan-Meier and Cox regression modeling, binary classification using Random Forest, XGBoost, and Neural Networks, and unsupervised clustering via K-Means.

Key Insights:

- Random Forest and XGBoost models performed best with ROC-AUC scores of 0.79 and accuracy of 72%.

- Neural Networks improved overall accuracy to 74% but showed similar AUC (0.76), indicating marginal gains from deep learning.

- Top predictive features included Tumor Size, Nottingham Prognostic Index, and Age.

- Kaplan-Meier survival curves revealed significant survival differences across tumor stages and treatment types (e.g., hormone therapy).

- K-Means clustering identified 5 distinct patient subgroups with varying treatment patterns and survival outcomes; Cluster 0 showed the lowest survival probability, while Cluster 1 had the highest.

Model Performance Comparison:

| Model | Accuracy | ROC-AUC | Notable Strength |

|---|---|---|---|

| Random Forest | 72% | 0.79 | Balanced accuracy and interpretability |

| XGBoost | 72% | 0.79 | Handles non-linearity and feature interactions well |

| Neural Network (MLP) | 74% | 0.76 | Improved accuracy with deeper architecture |

| Logistic Regression | 70% | 0.74 | Baseline interpretability and feature insights |

Impact:

This project demonstrates the integration of survival statistics, machine learning, and unsupervised clustering to derive actionable insights from clinical datasets. It supports the development of risk stratification tools and highlights the role of data science in precision oncology.

Revenue Prediction & Customer Analytics for Supermarkets

Tools: Python, pandas, scikit-learn, xgboost, keras, statsmodels, Streamlit

Skills Demonstrated: Time series forecasting, regression modeling, deep learning, EDA, feature engineering, dashboard development, business analytics

Summary:

This end-to-end project forecasts next-day revenue across supermarket categories using sales data from five major UK retailers. The project includes extensive feature engineering (e.g., lag features, cyclical encodings, rolling statistics), EDA, and predictive modeling using machine learning, deep learning, and time series approaches. A Streamlit dashboard was also built to make insights and predictions accessible to non-technical users. The models were evaluated using MAE, RMSE, and R² metrics.

Key Insights:

- Random Forest delivered strong predictive performance with low MAE and RMSE, making it ideal for stable retail forecasting.

- XGBoost achieved the highest R² score (0.91) and performed best overall, capturing complex interactions between price, product type, and time-based features.

- LSTM models captured sequential sales trends but had higher variance, particularly on test data.

- ARIMA was the weakest performer in this multivariate setting, confirming the advantages of machine learning in retail forecasting tasks.

Model Performance Comparison:

| Model | R² (Test) | MAE (Test) | RMSE (Test) |

|---|---|---|---|

| Linear Regression | 0.85 | 5.37 | 15.78 |

| Random Forest | 0.90 | 2.39 | 11.08 |

| XGBoost | 0.91 | 3.76 | 13.55 |

| ANN | 0.90 | 3.60 | 11.68 |

| LSTM | 0.85 | 5.17 | 16.43 |

| ARIMA | 0.67 | 6.40 | 7.40 |

Impact:

This project provides a practical framework for retail revenue forecasting using interpretable and high-performing models. The results can help supermarkets reduce inventory waste, improve pricing decisions, and optimize daily operations. The accompanying Streamlit app empowers stakeholders to explore trends, compare product pricing, and visualize performance across brands and categories.

Data Science Projects in R

Macroeconomic Forecasting with VAR Models in R

Tools: R, vars, urca, dplyr, ggplot2

Skills Demonstrated: Time series stationarity analysis, ARIMA modeling, VAR modeling, cointegration testing, impulse response analysis, forecast error variance decomposition, data visualization

Summary:

This project applies time series forecasting techniques in R to analyze and predict movements in four key macroeconomic indicators: the Consumer Price Index (CPI), Producer Price Index (PPI), PPI for Finished Goods (PPIFG), and PPI for Finished Consumer Foods (PPIFCF). The analysis involved testing for stationarity and cointegration, fitting ARIMA and Vector Autoregressive (VAR) models, and evaluating model performance using forecast error metrics (MSE, MAE). Impulse response functions and forecast error variance decomposition (FEVD) were used to analyze interdependencies and the effects of shocks on each series.

Key Insights:

- All four series were found to be integrated of order one (I(1)), requiring first differencing for stationarity.

- Optimal lag lengths for VAR models were determined using AIC, with VAR(6) providing the best forecasting performance overall.

- Impulse response analysis showed CPI was significantly influenced by lagged values of PPI and PPIFG, while PPI responded to shocks in CPI and PPIFG with lagged, oscillating behavior.

- FEVD analysis confirmed CPI’s dominance in explaining its own forecast variance and PPI’s high sensitivity to CPI innovations.

Model Performance Comparison (Forecasting Accuracy):

| Model | Variable | MSE | MAE |

|---|---|---|---|

| VAR(6) | CPI | 0.145 | 0.304 |

| VAR(6) | PPI | 1.820 | 1.107 |

| VAR(6) | PPIFG | 1.116 | 0.935 |

| VAR(6) | PPIFCF | 2.377 | 1.356 |

| VAR(2) | CPI | 0.313 | 0.494 |

| VAR(2) | PPI | 1.089 | 0.819 |

| VAR(2) | PPIFG | 1.105 | 0.927 |

| VAR(2) | PPIFCF | 2.339 | 1.113 |

| VAR(1) | CPI | 0.137 | 0.311 |

| VAR(1) | PPI | 1.253 | 0.882 |

| VAR(1) | PPIFG | 0.988 | 0.896 |

| VAR(1) | PPIFCF | 2.002 | 1.058 |

Impact:

This project demonstrates the power of multivariate time series models in capturing macroeconomic relationships and forecasting inflation-related indices. It also shows the importance of model selection, diagnostics, and interpretability in economic forecasting. The results have implications for central banks, policy analysts, and economists interested in inflation dynamics and inter-market linkages.

Brain Stroke Prediction

Tools: R, dplyr, ggplot2, ROSE, caret

Skills Demonstrated: Data cleaning, class imbalance handling, model comparison, feature importance analysis, binary classification

Summary:

This R-based project focuses on predicting the likelihood of a stroke in patients using demographic and health-related data. The dataset contains 5,110 records and 12 features, including age, glucose levels, BMI, and smoking status. Preprocessing involved handling 210 missing values, encoding categorical variables, and normalizing continuous features. Multiple classification algorithms were used to assess model performance, including logistic regression, decision trees, and random forest classifiers.

Key Insights:

- Initial model performance was hindered by class imbalance, prompting the use of ROSE and a combined under/over sampling strategy for rebalancing.

- Random Forest consistently outperformed Logistic Regression and Decision Tree classifiers under both sampling strategies.

- Feature importance plots from Random Forest highlighted age, glucose level, and hypertension as key predictors of stroke risk.

Model Performance Comparison:

| Sampling Technique | Model | Accuracy | Recall | AUC Score |

|---|---|---|---|---|

| ROSE | Random Forest (no feature selection) | 0.78 | 0.79 | 0.794 |

| Under + Over Sampling | Random Forest (no feature selection) | 0.916 | 0.94 | 0.84 |

Impact:

This project demonstrates how machine learning can support early detection of stroke risk factors using health and lifestyle data. It highlights the role of sampling techniques in improving model performance and reinforces the importance of data-driven tools in public health prediction and prevention efforts.

Data Visualization Projects

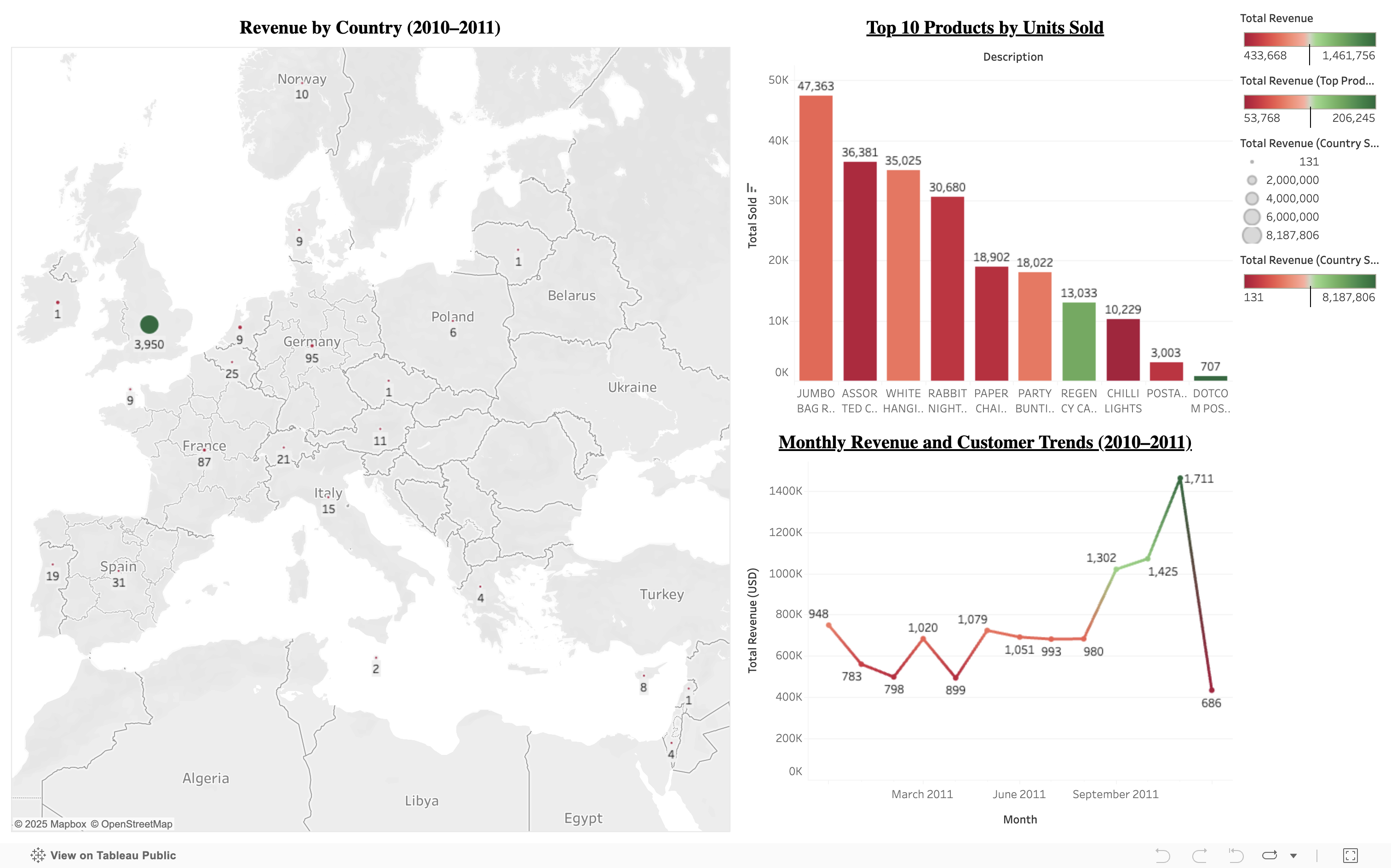

E-Commerce Analytics Dashboard (UK Retail Sales)

Tools: dbt, DuckDB, Python, Tableau

Skills Demonstrated: Data modeling, SQL transformations, dashboard design, KPI tracking, end-to-end data pipeline development

Summary:

This project demonstrates a complete analytics workflow starting from raw transactional data to an interactive dashboard. Using a UK-based e-commerce dataset (2010–2011), I modeled and transformed the data using dbt and DuckDB, queried the final tables using Python, and visualized insights in Tableau Public.

Key Insights:

- Revenue peaked in November–December, revealing strong seasonal trends.

- Top products included gift sets and vintage items with high repeat purchases.

- The UK dominated revenue generation, followed by the Netherlands and Germany.

- Customer segmentation showed a small group of high-value buyers contributing disproportionately to sales.

Impact:

This dashboard supports retail decision-making by tracking KPIs like monthly revenue, top-performing products, customer behavior, and geographic distribution. It reflects the real-world utility of data modeling tools like dbt combined with storytelling through Tableau.

📍 View interactive dashboard on Tableau Public

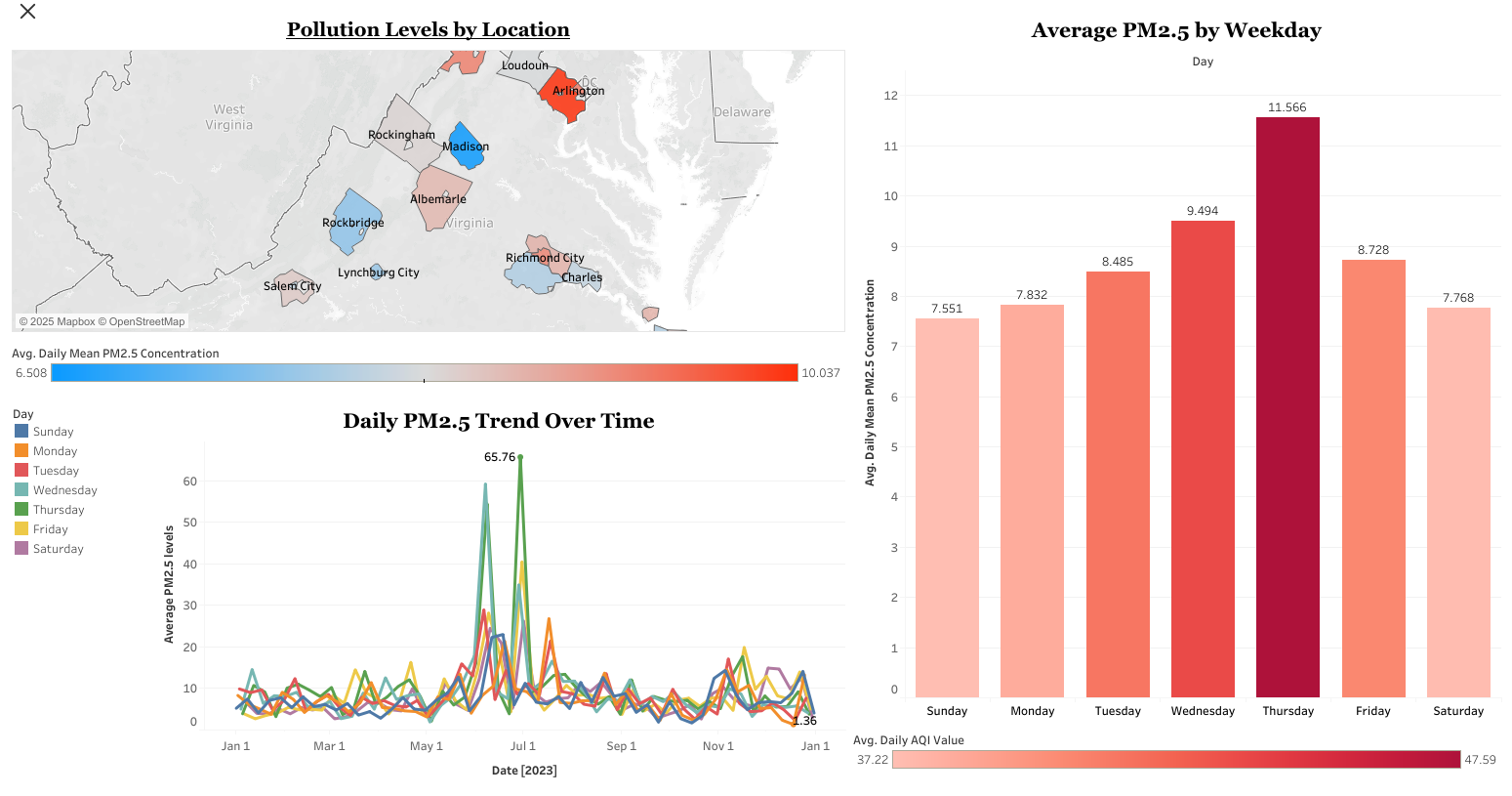

Virginia Air Quality Dashboard

Tools: Excel, Tableau

Skills Demonstrated: Data cleaning, visualization design, public health analysis, storytelling

Summary:

Built an interactive Tableau dashboard to analyze PM2.5 pollution levels across Virginia using data from the EPA. The dashboard visualizes daily, weekly, and county-level air quality trends, making it easier for policymakers and the public to identify pollution spikes and geographic hotspots.

Key Insights:

- Identified consistent midweek spikes in PM2.5 pollution across multiple counties.

- Found higher pollution levels in urban counties compared to rural areas.

- Enabled interactive exploration of air quality trends by time period and location.

Impact:

The dashboard improves public awareness and supports data-driven decisions in environmental planning and health policy.

📍 View interactive dashboard on Tableau Public

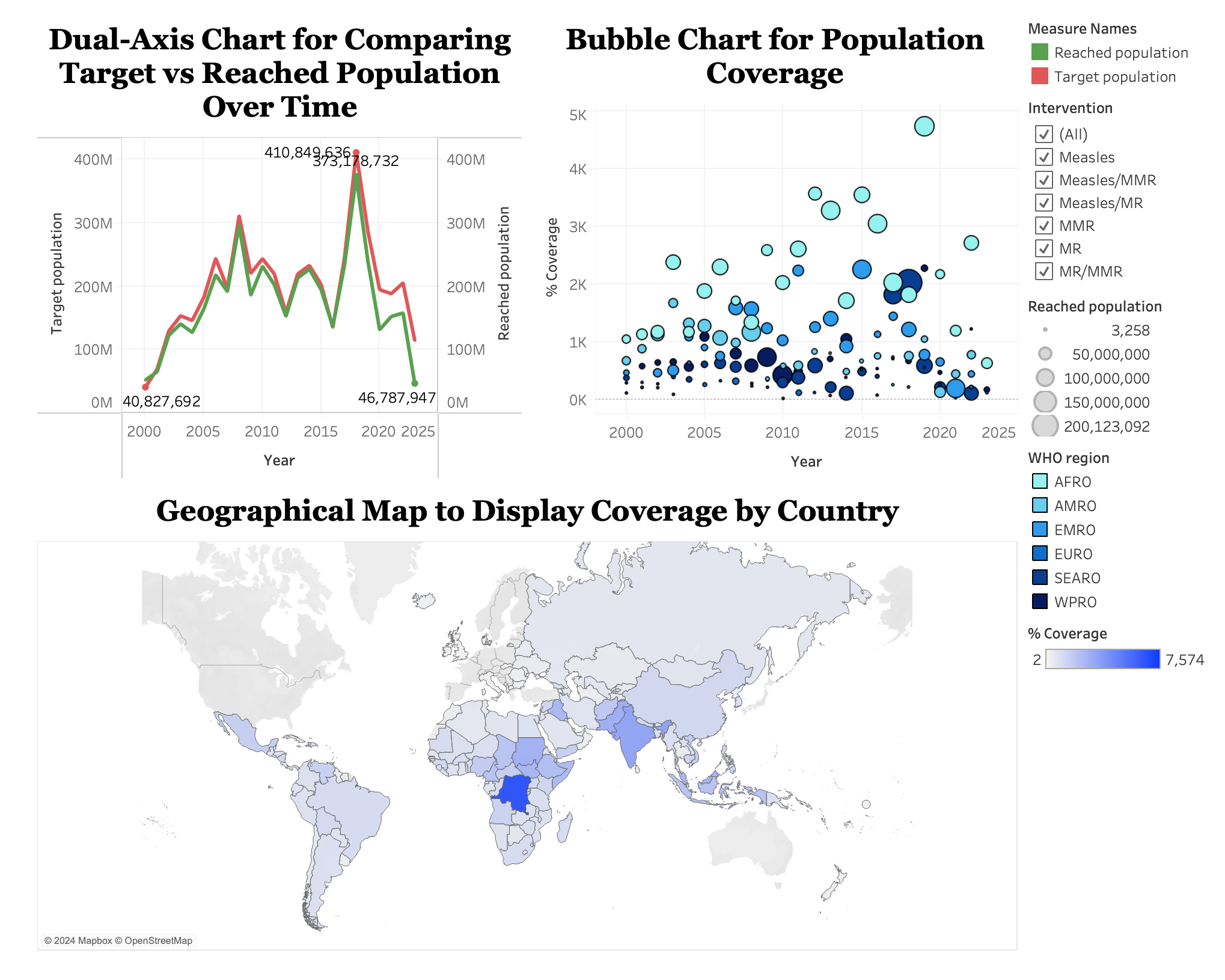

Measles & Rubella Immunization Activities

Tools: Excel, Tableau

Skills Demonstrated: Data preprocessing, trend analysis, interactive dashboard design, public health visualization

Summary:

Built an interactive Tableau dashboard to explore global measles and rubella immunization efforts from 2000 to 2024. The dashboard visualizes coverage trends, regional disparities, and campaign timelines using various interactive charts.

Key Insights:

- Highlighted regions with consistently suboptimal immunization coverage, guiding targeted public health interventions.

- Identified temporal trends in vaccination effectiveness across intervention types and WHO regions.

- Enabled dynamic data exploration through filters and visual drill-downs, improving accessibility and usability of global immunization data.

Impact:

The dashboard empowers public health organizations and planners to make informed, data-driven decisions by identifying at-risk populations and optimizing future immunization strategies.

📍 View interactive dashboard on Tableau Public

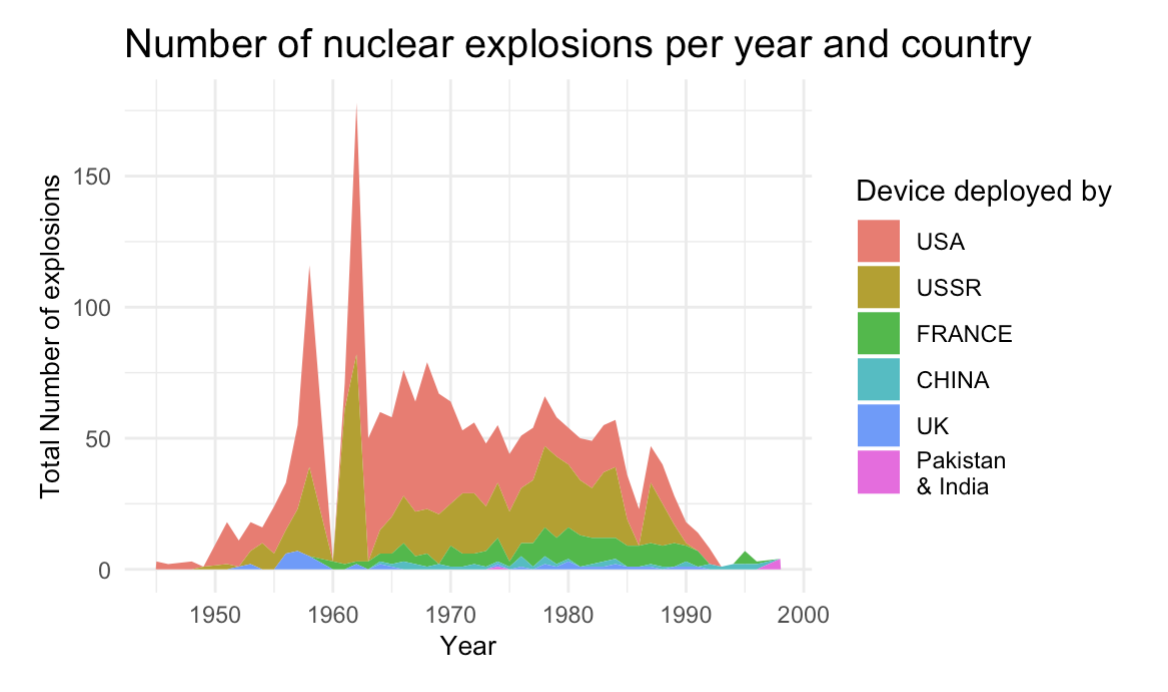

Visualizing the History of Global Nuclear Tests (1945–1998)

Tools: R, ggplot2, dplyr, lubridate, sf

Skills Demonstrated: Data wrangling, geospatial visualization, time series analysis, historical trend analysis, public data storytelling

Summary:

This data visualization project explores the global history of nuclear testing from 1945 to 1998, focusing on tests conducted by the United States and the Soviet Union. Using R, the analysis covers detailed attributes such as geographic location, type, depth, and estimated yield of each test, along with its purpose and responsible organization. The visualizations highlight the escalation of nuclear activity during the Cold War and provide historical context to global arms development and policy shifts.

Key Insights:

- Revealed a concentration of nuclear tests during peak Cold War periods, particularly by the U.S. and USSR between the 1950s and 1980s.

- Identified geographic clustering of test sites (e.g., Nevada and Semipalatinsk), shedding light on strategic testing zones.

- Showed changes in testing methods over time, with shifts from atmospheric to underground testing aligned with evolving international treaties.

Impact:

The project provides a historical lens through which researchers, historians, and the public can explore the scale, timing, and geopolitical implications of nuclear weapons testing. It enhances understanding of 20th-century military history through accessible and data-driven visuals.

Technical Portfolio Website

Tools: GitHub Pages, Jekyll, SCSS, Markdown, HTML

Skills Demonstrated: Static site generation, technical writing, UI customization, documentation, Git-based version control

Summary:

This portfolio website was built using Jekyll and GitHub Pages as a fully customizable static site. It showcases technical projects across data science, machine learning, and analytics, while also demonstrating front-end development skills through custom styling and layout design. Core pages such as index.md and experience.md were created and managed directly using Markdown and HTML.

Key Features:

- Structured navigation for Data Visualization, Machine Learning, NLP, R Projects, and Time Series sections.

- Customized layout based on Jekyll's Slate theme, extended with personalized SCSS styling.

- Responsive images and justified content layout for a clean, professional appearance.

- Interactive buttons linking between internal project pages.

- Uniform styling across project entries using HTML-based tables and consistent formatting across Markdown files like

index.mdandexperience.md.

Impact:

This site not only presents project work but serves as a technical artifact in itself — demonstrating end-to-end static site development, version control, layout design, and content management for professional documentation and portfolio presentation.